Prize-winning talk at the AGI conference 2019!

The presentation “Toward an efficient AIXI approximation given the properties of our universe” has been awarded the Kurzweil Prize for the Best AGI Idea 2019!

Talk at AI Journey 2019 in Moscow

Our approach to artificial general intelligence

Our presentation at GOOD AI (Prague). Topic: Our approach to artificial general intelligence

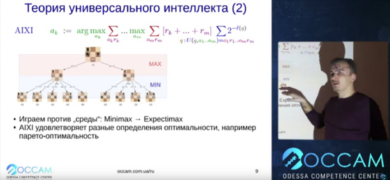

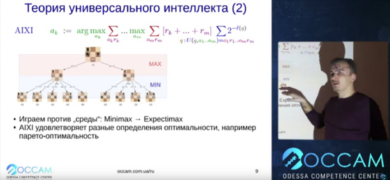

Recent lectures on AGI by Dr. Arthur Franz (in Russian)

For our laboratory, the year began auspiciously with a succession of introductory lectures held in Odessa. Those of our readers who speak Russian can watch...

Emergence of attention mechanisms during compression

It just dawned on me. When we want to compress, we have to do it in one incremental fashion or another, arriving in description...

Approximating the theory of universal intelligence by means of incremental compression of information

On February 7, 2018, at 14:30, in the main building of ONU (Dvoryanskaya 2), room 47 (1st floor), a regular session of the Odessa Seminar on Discrete Mathematics took place, organized jointly by Odessa National University...

On the way to superintelligence

On January 22, a unique event took place at Terminal 42: an introduction to the private non-profit research laboratory OCCAM and a lecture in which the founder of OCCAM, Arthur Franz, told the audience...

Hierarchical epsilon machine reconstruction

Having read this long paper by James P. Crutchfield (1994), “Calculi of emergence”, we have to admit that it is very inspiring. Let’s think about...

Universal approximators vs. algorithmic completeness

Finally, it has dawned on us. A problem that we had trouble conceptualizing is the following. On the one hand, for the purposes of universal...

The merits of indefinite regress

The whole field of machine learning, and artificial intelligence in general, is plagued by a particular problem: the well-known curse of dimensionality. In a...

Using features for the specialization of algorithms

A widespread sickness of present “narrow AI” approaches is the almost irresistible urge to set up rigid algorithms that find solutions in an as large...

The physics of structure formation

The entropy in equilibrium thermodynamics is defined as , which always increases in closed systems. It is clearly a special case of Shannon entropy ....
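
As a brief sketch of the special-case claim above: the formulas in the excerpt did not survive, so the following assumes (this is an assumption, not confirmed by the text) that the thermodynamic entropy meant is the standard Boltzmann form over $\Omega$ equally likely microstates, and that the Shannon entropy is taken with the natural logarithm.

$$
S = k_B \ln \Omega, \qquad H = -\sum_{i=1}^{\Omega} p_i \ln p_i
$$

For a uniform distribution, $p_i = 1/\Omega$, the sum reduces to

$$
H = -\sum_{i=1}^{\Omega} \frac{1}{\Omega} \ln \frac{1}{\Omega} = \ln \Omega, \qquad \text{so} \qquad S = k_B H .
$$

Under these assumptions, the thermodynamic entropy is the Shannon entropy of the uniform distribution over microstates, scaled by $k_B$.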

Incremental compression

An obvious problem of the incremental approach is local minima in compression. Is it possible that the probability of ending up in a local minimum...

Scientific progress and incremental compression

Why is scientific progress incremental? Clearly, the construction of increasingly unified theories in physics and elsewhere is an example of incremental compression of experimental data, of...

Recursive unpacking of programs

One idea that we have been following is the idea of simple but deep sequences. Simple in terms of Kolmogorov complexity and deep in terms...

Learning spatial relations

The big demonstrator that we have in mind is the ability to talk about some line drawing scene, after having extracted various objects from it...

Extraction of orthogonal features

The lesson from those considerations is the need for features. Each of those relations does not fix the exact position of the object but rather...

Extending the function network compression algorithm

So, what are the next steps? We should expand our attempts with the function network. That, it seems, is the best path. One thing that...